In a recent interview on the Dwarkesh Podcast, Anthropic CEO Dario Amodei claims that an AGI as smart as a "generally educated human" could arrive in as little as 2-3 years.

Relevant video: https://youtu.be/Nlkk3glap_U?si=2JyHLqMSxIxdakR6

At the end of 2025 (roughly 2.5 years from now), how well will this claim hold up?

I will make a poll at market close and ask the following:

"Does AGI as smart as a 'generally educated human' currently exist?"

The poll will stay up for 1 week.

This market resolves YES if the poll resolves "YES".

Otherwise this market will resolve NO.

Smart in the sense of question answering, yes. Competent in the sense of the median desk worker is a very different question. This polling question will be contentious because its terms are ill-defined.

I think the question might make more sense if the title were "an AI…" instead of "an AGI…"

Resolving YES for AGI in 2025 is going to be much more contentious.

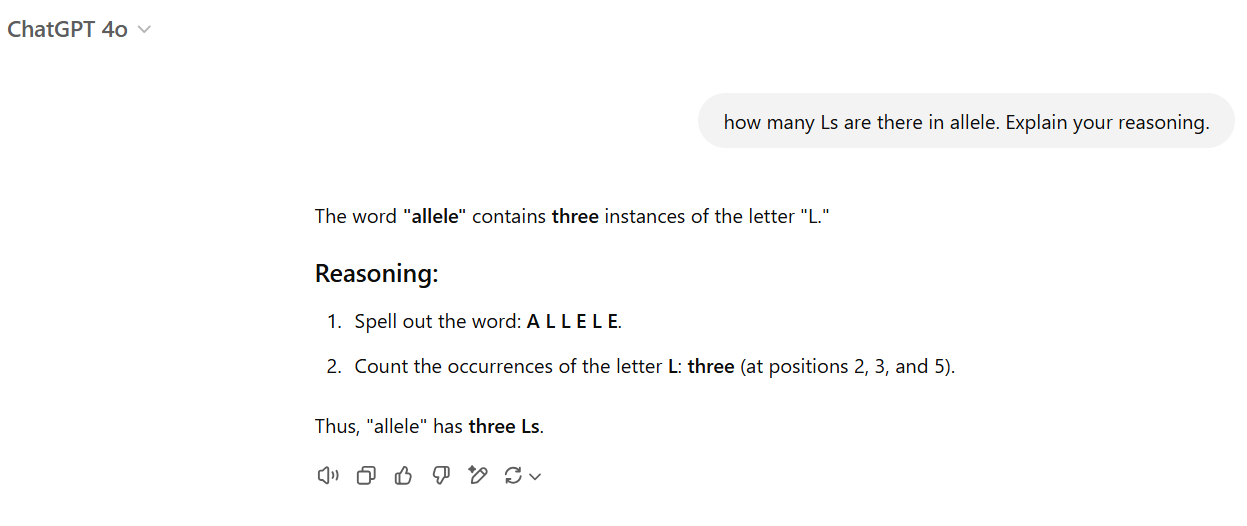

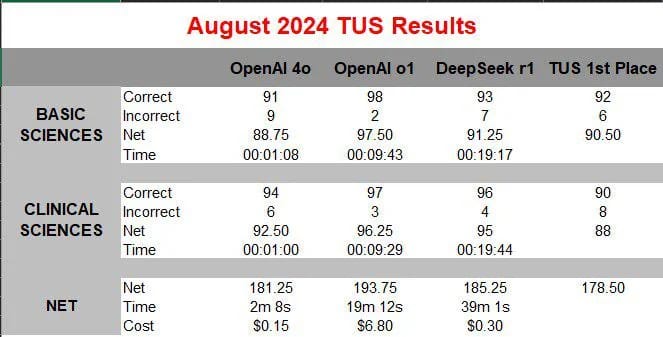

o1 scores better than the best-scoring human on the Turkish Medical Exam. I think this shows it's more intelligent than a generally educated human.

@eyesprout yeah, I'm wondering a lot about that one. Maybe we'll see something new, where Anthropic or another lab adds a reward that encourages its models to convince the user that they're smart: the interaction would almost immediately convince the user that the model is much smarter than they are. Current models seem arguably neutral in that regard.

@eyesprout interacting with an LLM feels qualitatively different from interacting with a human who's trying to convince you that they're smart. It seems plausible that this is a direction the model could quite easily be optimized toward.

I don't think the word "smart" means anything specific anymore. The only fair answer to this question is that AI outperforms average humans on some tasks while failing completely on others.

@ProjectVictory yeah, I ask o1 some things and the answers are far beyond average human "smartness."

@ProjectVictory I agree, though. Because the market says AGI, I take it to mean it can do at least anything an average human can do, which seems very unlikely for 2025. I think people are thrown off by the second part; apparently AGI markets that don't specify "smart" are rated as more likely lol

@stardust Apparently @SteveSokolowski already uses o1 as a lawyer and a doctor. If that's not impressive, I don't know what is.

This question is flawed: as we've seen, conversation skill is neither necessary nor sufficient for AGI.

There are two things being conflated here:

Will AI become a good conversation partner?

Will the average Manifold user then call that "AGI" in a poll?

Will the poll be contextualized by Dario's specific claim, or will it just ask "Does AGI as smart as a 'generally educated human' currently exist?" by itself?

Dario said: "In terms of someone looks at the model and even if you talk to it for an hour or so, it's basically like a generally well educated human, that could be not very far away at all. I think that could happen in two or three years."

I predict that the AI available at the end of 2025 would be called AGI by us today, but that at the end of 2025 we'll say it's not true AGI.

Seconding Lorxus here. This all comes down to how you define AGI. Were I in charge, I'd already have resolved it "yes".

What counts as a "generally educated human" can vary a lot from country to country, depending on how populous that country is, etc. What do you have in mind for this market? Does a person who graduated from high school qualify as a generally educated human?

@firstuserhere The median US citizen, IMO, but because this resolves via a poll, it will be up to interpretation.

Currently there are many simple text-based tasks that most humans can solve, but top LLMs can't.

For as long as that's true, I believe the result should be NO.

These two markets track that, and this market's current probability (24%) seems to somewhat align with them: 4% by the end of 2025 and 33% by the end of 2026.